Understanding limitations of LLMs

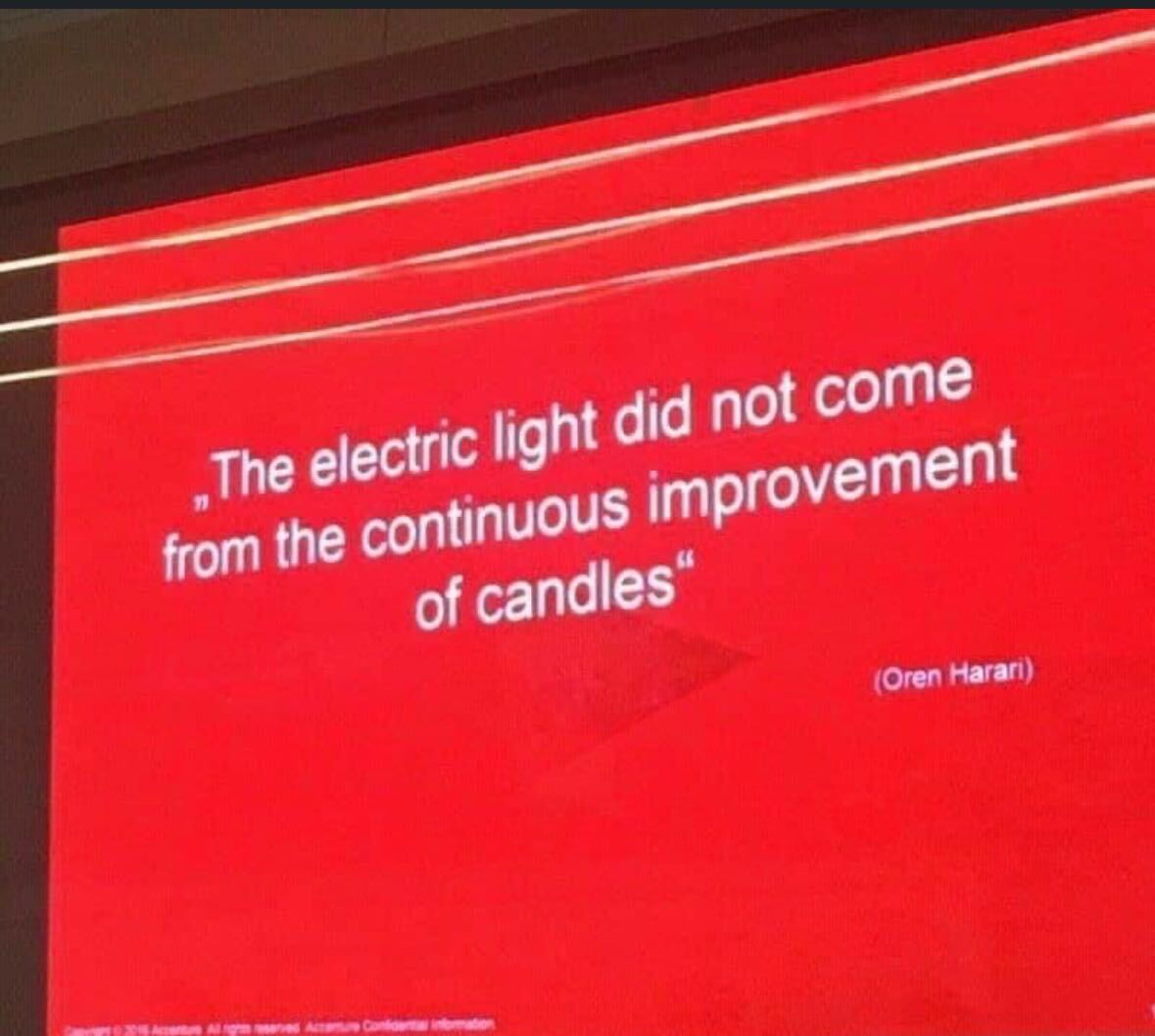

Since ChatGPT’s launch in November 2022, the field of generative AI has made remarkable progress. I still vividly recall my first conversations with ChatGPT, when it crafted a rhyming poem that left me completely “awe-struck.” Today, we routinely rely on it to generate code, build software, automate tasks, and much more. Every few months brings newer versions of LLMs with larger context windows, trained on billions of tokens. This has led me to wonder how these systems will evolve in the coming years, given their seemingly limitless potential. But is simply improving LLMs the right path forward? It reminds me of a saying I once came across: “Electric lights were not invented by continuously improving candles.”

It seems the AI models powering our progress today are, in fact, fundamentally limited. This is not my own revelation. I came to this understanding by listening to a few pioneers I follow in this field, most notably Yann LeCun and Prof. Fei-Fei Li.

Yann LeCun seems to have had reservations from the beginning about LLMs as the path to AGI (whatever that turns out to be). He pioneered convolutional neural networks (CNNs), an approach to building intelligence through vision. So his resistance to LLMs, which build intelligence through language, might be a little biased toward his own research interests.

But when I thought about it more closely, I realized they both had a valid point. Language is not “natural”: it was created by humans for sharing information and communicating. It was human intelligence that created “languages” (perhaps more of them than we needed). LLMs can be trained to understand and communicate in these languages, but can they create a new one? Consider a research story in which researchers created an exclusive social media platform for AI bots, only to discover that it quickly turned into an echo chamber with no fresh contributions.

As Prof. Li pointed out in a podcast, there is a naturally existing 3D world out there that every living organism learns to interact with. Our current AI systems need an interface to that world in order to model, understand, and interact with it. And that world is far more random and non-deterministic than language, which is semantically structured and predictable.

Maybe we are only at the beginning of AI’s evolution?